Let’s ask AI how not to leave Canada’s AI industry behind

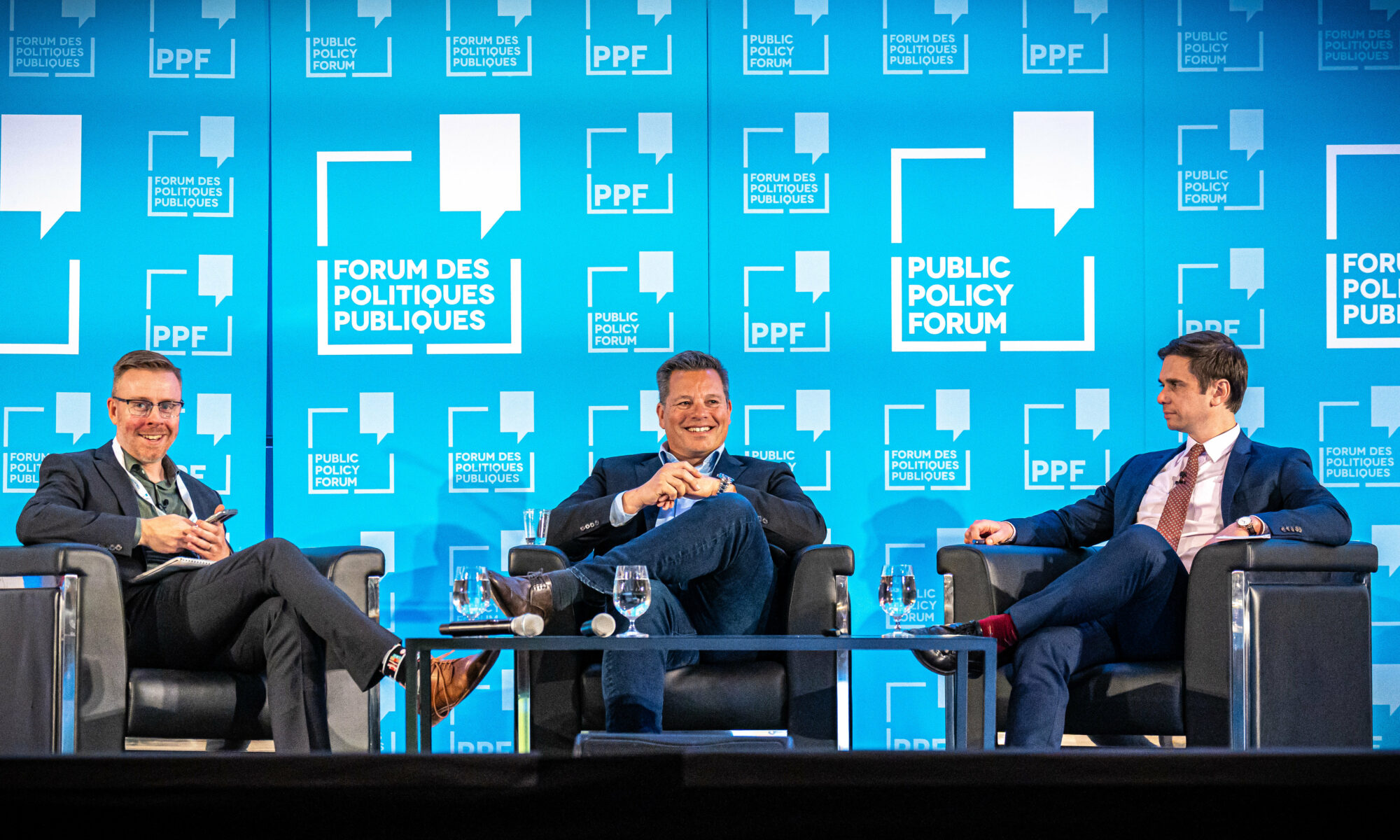

The following is a transcript of a fireside chat with Chris Barry, President, Microsoft Canada, and Owen Larter, Director of Public Policy, Office of Responsible AI, Microsoft, moderated by Nick Taylor-Vaisey, Journalist, POLITICO Canada. It took place at the Canada Growth Summit on April 28, 2023.

Nick Taylor-Vaisey, Journalist, POLITICO Canada: This is pre-recorded, so it’s all going to go super smooth.

Chris Barry, President, Microsoft Canada: That’s the truth. Including the typing at real speed.

NT: That’s right. So you ask a question, you get an answer, and it feels miraculous to those of us who are laypeople when it comes to the world of artificial intelligence. A robust answer just keeps going. While we watch ChatGPT finish up its work, I’m going to introduce who’s on stage here. Our first speaker, immediately to my left, has over 20 years of experience at Microsoft. He’s held several leadership roles, including chief operating officer for Microsoft’s Industry Solutions business. He’s a member of the Tech Nation board of directors and, perhaps most importantly, the president of Microsoft Canada. Welcome, Chris Barry.

CB: Great to be here.

NT: And we’re also joined by an eight-year veteran of the company. He started as a U.K. government affairs manager before making the move to the greater Seattle area. He’s now director of public policy in the Office of Responsible AI at Microsoft, Owen Larter.

Owen Larter, Director of Public Policy, Office of Responsible AI, Microsoft: Thank you very much.

NT: When I was reading about how you’re in Seattle, which is a logical place for you to work, all I could think about, and I’m sorry if this sounds uncultured, was Season 4 of Love is Blind, which is set in Seattle. I just finished it. It’s amazing.

OL: I’ve been hearing a lot about this. I haven’t seen it yet, sadly. So it’s obviously something to...

NT: But there’s an artificial intelligence joke in there somewhere. I’m not going to make it out of respect for our friends in reality TV.

NT: But let’s talk about artificial intelligence and substance; we’re past the trivia and the Love is Blind small talk. My first question: why does it seem like everybody in my life, and perhaps in yours, was caught off guard by, excited and maybe a little nervous about, but absolutely rapt by the rise of chatbots, whether it’s ChatGPT or Bing or others that are emerging?

CB: Yeah. Nick, maybe I’ll start. First, it’s great to be here with this group and great to participate in this event, including the dinner we hosted last night on many of these topics. I think we can draw almost a direct parallel between what we have going on right now and what happened 30 years ago this year, in January 1993: the release of the first Mosaic web browser. For those of us who were of age at that time to begin tinkering with the internet, the internet itself had been under development for years prior, led by work at DARPA and at universities around the world. The advent of the Mosaic browser is really what brought to the fore the portal by which people could first interact with the internet, and that world of possibility was immediately opened. While it’s probably fair to say that at the time very few people understood what a hypertext transfer protocol was, they did understand very quickly that if they typed “http://www.something.com” or “.org”, it would transport them magically to a new place. And it was simultaneously exhilarating and maddening, fraught with anxiety and possibility. Many of us will remember that the fastest internet speed at that time was 14.4 kilobits per second, which is roughly 14 thousandths of one thousandth of the speed we get at home today with gigabit service. And I think there’s a direct parallel there. These things have been around, but now the usefulness, the immediacy, is there. While the technology behind things like generative AI and large language models is complex for sure, and these things take massive compute power, the ability for people in all walks of life to simply open a text window in a browser and query it, ask a question, or ask it to generate something is immediately graspable, and they can immediately ideate on how they might use it.
That, in addition to its ready accessibility, is what I think has caused this shift in the last 12 to 16 weeks as we open up this year. So that would be my take on it.

OL: Sure. I thought you put it very well, Chris. I agree with everything you said. I would also point to the really organic, bottom-up adoption of this technology. You used the phrase “caught off guard.” I think some people haven’t been caught off guard. I think a heck of a lot more people are actually going out and using this technology. ChatGPT was the fastest-ever consumer product to a million users and the fastest-ever consumer product to 100 million users. This technology is actually the product of many decades of incremental improvements, and I think it’s now just reaching a point, as Chris elucidated, where it’s increasingly useful to people across society. The other thing that’s really helpful in my world, the world of public policy conversations, is this: lots of people have been talking about the risks and challenges of AI, and how you address them, for a number of years now. What’s happened over the last few months is that all of that has been mainstreamed. The use of the technology has been mainstreamed, and the conversations around the opportunities and risks and how to balance them have been mainstreamed as well. And I think that’s a really good thing in terms of making progress.

CB: Yeah. And just to add a small dimension to what Owen was alluding to: ChatGPT hit 100 million users in two months. You can correct me, but I believe Instagram took nearly five years to reach the same, and it’s something we think of as ubiquitous, something almost all of us have on our phones. You’re talking about a rate of adoption that is nearly vertical relative to some other technologies, which, again, signifies the interest.

NT: Are people justified in being a little nervous, if they are? There’s a thin line between fascination and fear. “Fear” might be fearmongering, but there’s a little bit of unease about a world that was human now seeming slightly less human, because ChatGPT is opening up a whole new world. From a public policy perspective, how do you talk about that with people?

OL: No, it’s a great question. Should people be nervous? Should people be fearful? It’s a question we get asked a lot. There is a lot of uncertainty, and there’s a lot of change happening, and change always brings uncertainty. I’m not sure being fearful alone is that helpful. I think people should definitely be aware of the technology, aware of the opportunities, aware of the challenges. And then we all need to take steps as a society to put in guardrails so that we can realize the benefits in a responsible way. This is where I’m quite heartened by the development of the conversation over the last few months. I’ve been on the Responsible AI team at Microsoft for about three years now, and I honestly think I’ve seen more change and more progress in the public conversation around AI in the last three months than in the rest of my time on the team. That’s really helpful, and I’d identify three main trends, all of which I find quite heartening. One is around adoption. We talked about ChatGPT. You can also see it with our customers using our Azure OpenAI Service, which is where we’re providing enterprise-grade access to the OpenAI models. Chris is obviously an expert on this and I’m sure can say more. We’ve seen a 10x increase, month on month, in people using this service. So there is a huge amount of excitement, both bottom-up and organic on the ChatGPT and new Bing side, and also from enterprise customers. The adoption tells you that people are finding value in this technology. The second trend is an acceleration of public policy conversations around the world. Countries right around the world are now trying to work out what the right regulatory guardrails for this technology are, and I think that’s really, really helpful.
I think you’re starting to see an international aspect to that conversation as well, with countries realising that this is an international technology that is developed and used across borders. So when you’re building regulation, it makes a lot of sense to make sure that regulation is interoperable across borders. That’s not to say every country should have identical regulation, but core concepts should be interoperable. And I do think Canada is right at the front of this global conversation. On the AI and Data Act, I think people around the world are looking at what is going on here and seeing it as a really good starting point, with a really good philosophy, and seeing a conversation in Canada that is worth paying attention to. The last point I’ll make, where I think there’s been really positive progress over the last six months, and where that progress is accelerating, is on standards, frameworks and tools that we can use right now to use AI in a more responsible way. Regulation will take a little while to come into place, but there are ISO standards now around things like risk management and building AI management systems. There is the NIST AI Risk Management Framework in the U.S., which sets up a template for how any company can put together an AI governance framework to address the risks of AI. And there’s been big progress on responsible AI tooling, so that developers can better understand and address challenges in their models. So it’s the really good progress across all of those areas that gives me a lot of hope.

CB: And maybe I could just add on to that, in terms of things that might be unsettling during this time. There’s certainly been any amount of press coverage and conversation about parts of it, like how disruptive this is going to be for things like employment. And it’s a double-edged sword. On the one hand, there are significant productivity enhancements to be gained from this set of technologies. GitHub, a company we acquired in 2018, is our development platform, and we’ve introduced a Copilot concept built into it, which allows developers to code with these models. What we’re finding is that, on average, developers are 45% more productive, which is a staggering figure when you think about the expense of a developer. At one level you might say, well, gosh, that’s terrifying. Or maybe it’s great if you own a company, because you can fire half the developers. But that’s not the point. The point is that, in addition, they’re about 75% more satisfied with their jobs. Why? Because rather than doing the drudgery of debugging, of compiling libraries and that type of thing, they can focus on higher-order problems. We will see this play out as this set of technologies is adopted. There will be moments that are disquieting, but there’s going to be massive opportunity as well.

NT: I know we want to talk about regulation and expand on the opportunities. Before we get there: you work for a company that is innovating very quickly on this, and when we talk about risk, there’s the question of the protocols in place to manage it. As you innovate, not just on chatbots but across the sector more broadly, what kinds of risks are there, and how are you managing them at what feels like lightning speed?

OL: Yeah, it’s a great question, and I think it is a really important part of the conversation. At Microsoft, we don’t want to give the impression that we don’t appreciate that there are risks. I do think there is an immense opportunity, and there has to be a balance there, but for sure there are risks, and I break them down in a few ways. Firstly, we need to make sure that we’re developing and deploying AI in a way that is responsible and ethical. One thing that comes to mind is the way AI is increasingly being used right across society to make material, consequential decisions about individuals. Does someone get access to credit? Does someone get into a university? It simply cannot be the case that a system being used to support a decision like that might be discriminatory or biased in some way. So I think that is a real risk that needs to be addressed. I also think there is a geopolitical element to this conversation. We need to make sure that we’re advancing AI in a way that supports the economic competitiveness, the sovereignty and the national security of democratic countries in particular. That’s something we need to give a lot of attention to. And then I would build on the point that Chris made. This will be a period of real change. There is real opportunity, but we need to be particularly thoughtful about some very sensitive aspects of society: the education space, for example. And what will the changes to the world of work mean for those of us who are working at the moment? How do we help guide those changes in the right direction so that AI is beneficial broadly across society, not just to a small group?

NT: OK, let’s talk about the legislation. There is legislation on the table right now, Bill C-27, which covers more than artificial intelligence, but there’s certainly a big chunk of it about AI. I’m wondering if you can talk about what problem that bill may be solving or should solve, and what opportunities it may unlock. Then we can talk a little bit about the reality of what the bill does accomplish and how the actual debate is playing out. But what can legislation do? What can it accomplish?

OL: Yeah, that makes total sense. I’d zoom out a little bit. Legislation and regulation are going to play a really important role, but really this is a broad societal conversation about how you create new institutional frameworks for a completely transformative technology. So regulation is needed, for sure. You need new rules to guide new technology. Government is going to have to play a leading role here, just as it has right across history, leading the charge and putting the rules in place for new transformative technology. Government’s going to have to build infrastructure, and it’s going to have to make the rules. As I alluded to in my first comment, I do think the AI and Data Act is a really good starting point. The philosophy of the AI and Data Act is to focus on flexible processes for risk identification and mitigation that can stretch to the breadth of the AI ecosystem and keep pace with the technology as it develops, because it’s going to continue to develop quickly. That’s a really important core part of the AI and Data Act, and one we’re very supportive of. Some of the conversation around AIDA has been this: with things moving so quickly, how do you make sure that the really sensible intent and philosophy behind the bill is properly captured in the bill’s language, so that everyone knows what the framework is going to look like and what they’re going to be subject to, and so that we can all debate it in the meantime? Government has a really, really important role to play and to lead. I would call out just a couple of other sectors of society that I think are also important. Industry clearly has a really important responsibility to step up. We’ll need to follow the rules when they’re made, and of course we’ll do that.
We also need to take steps, and keep taking steps, to demonstrate that we are trustworthy. We’ve put a lot of work into our internal responsible AI program; we’ve been building it out for six years now, and we’re trying to do more to share that information externally. We have our Responsible AI Standard, which is a set of requirements that any team at Microsoft developing and deploying AI has to abide by. We’ve published that now, so if you want to check it out and learn more, the Microsoft Responsible AI Standard is now a public document. We’ve also published our AI impact assessment. Any team at Microsoft that’s developing and deploying AI has to put together an impact assessment. We do this, a) to show that we’re walking the walk, quite frankly, not just saying nice words about responsible AI, and b) in the hope that others can build on the work we’re doing. The final point I’ll make, in terms of this broader societal framework and shift that we need to be mindful of, is that civil society and academia have a really, really important role to play here. These are very complicated, often technical conversations that are going to impact the breadth of society. We need civil society and academia to help chart a way forward, to identify the opportunities and challenges, and also, quite frankly, to provide scrutiny: to scrutinise the technology and to scrutinise the companies developing and using it. So they’re going to play a really important role as well.

CB: And I might just add, to be clear, that we at Microsoft support the regulation of AI globally. We absolutely do. And we think it’s great that Canada is taking a leadership position on that journey with AIDA. The tension point I think Owen was getting at is what’s in the bill itself versus what is left to regulations that are developed subsequently. What’s the right balance of specificity, so that the framework can move at the pace at which this technology is evolving, and with this groundswell of demand, but doesn’t become an impediment to innovation, leadership and opportunity, particularly in Canada, which is a world leader in AI technology? It’s a careful balance. We absolutely support regulation. These are powerful tools. But in the bill as drafted, and I’m not a total expert there, there are criminal penalties, and you’ve got to strike the right balance so that the bill is specific enough that players in the ecosystem broadly know how to comport themselves. And I know that’s obviously being worked on right now.

NT: What you phrased as a question was actually the question I wanted to put to both of you: what is that balance? With so many stakeholders who want to, and deserve to, be part of the conversation, and a government whose legislation leaves many of the details to regulation, how do you not end up with a law that falls hopelessly behind the technology, or has some sort of gap in its enforcement, or something else unforeseen? How do you strike that balance? You’re not elected politicians, so I’m not putting you on the spot to campaign on it.

CB: Definitely not that.

OL: No, but look, it’s a big problem that we all have to solve. Certainly for the public policy team working on these issues, this is something we feel we have to make a meaningful contribution to. It’s something we need to get right as a society. I think there are some important first steps to be taken. And I would agree with Chris: we definitely feel there is a need for a regulatory framework, and we feel the conversation in Canada is actually really, really positive and productive. That’s why we want to lean into it. A few points that are a bit more concrete. First, you need to identify the particular harms in the here and now that you can address. There are AI systems, again, making consequential decisions, as I mentioned, that are relatively well understood and are increasingly being used across society. So how do you make sure that when AI is being used in what you might call a high-risk domain to make a consequential decision, there is regulation around that? That’s something we understand a bit better. Second, you need to make sure this regulation is, as you mentioned, both durable and flexible, because if you think about the breadth of the AI ecosystem as a whole, AI is not just one thing. There are a whole load of different types of systems being deployed in a whole load of different scenarios across pretty much every sector of society. So how do you regulate that? It’s pretty challenging, but what you can do is what I would say the philosophy of AIDA is: focus on flexible processes around risk management. How do you go through a process of identifying the risks of your particular use case and mitigating those challenges? I think focusing on those processes will be really helpful.
Finally, because of the speed element, because of the need to be nimble and agile and keep up with the development of the technology, I think relying on some of the other tools in the regulatory toolkit will be really helpful. I’m thinking about things like standards again. On the ISO standards, there’s great work that has been going on in the background on that front for a number of years now, and it’s really helpful to be able to draw on it. There are more standards being developed on risk management, and more being developed on evaluation and measurement. We’ll have to build those out. And then there are these templates. I’ll mention it again because I think it’s a really important contribution to the conversation: the NIST AI Risk Management Framework. This is good to go; it was published a couple of months ago. NIST has also created really helpful frameworks on the privacy and cybersecurity fronts, and this risk management framework is something companies can use now to start building out their own governance frameworks.

NT: OK, so we’ve talked about regulation. Let’s talk about other opportunities. When you think about the impact that AI can have on workforce growth in Canada, what comes to mind as you look at the work your company is doing and at the sector?

CB: Yeah, the opportunity here is incredibly vast. We at Microsoft are obviously on a mission to make these tools readily available to people throughout society. At a product level, we’ve announced that we will be infusing our Office products with the Copilot capability, so it will be able to author with you, analyse data with you in Excel, and things of that nature. And that’s great. So there’s the broadly applicable piece. There’s also what we’re already seeing happen in the early stages of this unfolding. Maybe two examples I’d point to. Look at the City of Kelowna in British Columbia. They have a trial going, leveraging ChatGPT and the OpenAI technologies to create a bot that can evaluate building permits for homes, and do it at a level of specificity that looks at code variances and all of the minutiae that a city planner would normally spend days, weeks, even months vetting. That can be reduced to literally minutes, mere minutes. And, back to my prior point, this isn’t about getting rid of the city planners of Kelowna, who are already strapped for resources, but rather about how we make them more effective and how, over time, we turn that city office into one that is open 24/7. That’s a tiny example, but they will scale it out and bring it into production soon. My understanding is the City of Vancouver is looking at something similar, and who knows where it goes from there. I think that’s just a very tangible example. Another that I’d point to, and I’m looking around the room for him: Dr. Amrit, are you here? Raise your hand if you’re here. He was here. There he is. He was part of our experts dinner last night.
Dr. Sidev is a physician, and his wife is an optometrist, and he was explaining to us that in Canada there is an acute need to increase the capacity to do diagnostics using retinal scans. A retinal scan is very informative for detecting early diabetes, high blood pressure and a number of other maladies. And yet the University of Waterloo is the only school in this country that mints new optometrists every year, somewhere between 20 and 22 per year for the entire nation. So we have this massive capacity gap, and using computer vision technologies, amongst others, these scans can be digitised and automated, and those diagnoses can be made much more quickly. I think it’s a very tangible example of how you compress the time to early intervention, to getting people properly diagnosed, using a very non-invasive set of technologies: no blood tests, nothing like that. I hope that did justice to your anecdote. Give me a thumbs up. Thumbs up. I thought that was just a very impactful example of the promise, the potential, of this type of technology. The list is endless, but those are two that have been resonating.

OL: I agree. And just to build on what Chris was saying: the risks of this technology are real. We’ve talked about them, but the opportunity is so vast. I thought Chris gave a really good overview of it. Just a few of the things I’m really excited about: the way generative AI is already being used to drive some really impressive scientific breakthroughs. You’re already seeing research into how you can use generative AI to generate net new medicines and therapies. This is going on now. And new high-performance materials, which are going to be completely transformative right across society. One thing that’s a bit wonkish, but that I’m quite excited about, is how you can use AI to better measure and understand complicated systems. I think about this in the public policy world: how can you better understand the impact of the policy you’re putting together? There’s some really interesting research that’s been done using satellite imagery to better understand and predict where economic growth is occurring, just by looking at satellite data. I think we’ll see much more of this, and we’ll have a much better understanding of how the economy is working, how society is working. We might even be able to predict the weather accurately at some point in the future. You never know. So I’m excited about that as well. One of the things I’m actually most excited about sounds really mundane, but I think it will be really helpful for everyone. Chris talked a little bit about Microsoft 365 and the way we’re bringing AI to our Office products. We’ve already been able to play around a little bit with this. There is going to be a day soon when you can just tap into your chatbot: “Can you make sure that the background on slide six is the same colour as the background on slide two? Can you format the document so that these…” Yeah, exactly. There you go. It’s coming, it’s coming.
“Can you format the document so these bullets actually line up?” You know, stuff like that. It’s really mundane, and I think everyone would agree it’s fairly low risk, but it’s going to be really transformative and really helpful for all of us. So I’m really, really excited about these quite small, but I actually think very helpful, improvements as well.

NT: A perfectionist’s dream.

OL: Yes. There you go.

NT: This is a room of perfectionists, right?

OL: Right.

NT: You know, we joked on a call before this that this would be a fast 30 minutes, and it’s been 29 minutes and 57 seconds. That flew by, right on time. So thank you, Chris. Thank you, Owen. And thank you, everybody, for listening.

CB: Thanks for having us.